Feature Engineering vs Feature Selection: What’s the Difference?

If you’ve ever tried to build a machine learning model, whether in Python, R, or even a no-code tool, you’ve probably realized something surprising: the algorithm itself is not the star of the show.

The real magic happens with the data you feed into it.

That’s where feature engineering and feature selection come in.

They sound similar, almost like two siblings in the ML family, but trust me, they’re not the same.

In fact, understanding the difference between the two is one of the biggest jumps beginners make on their journey toward becoming real data science professionals.

Before we break things down, a quick reminder:

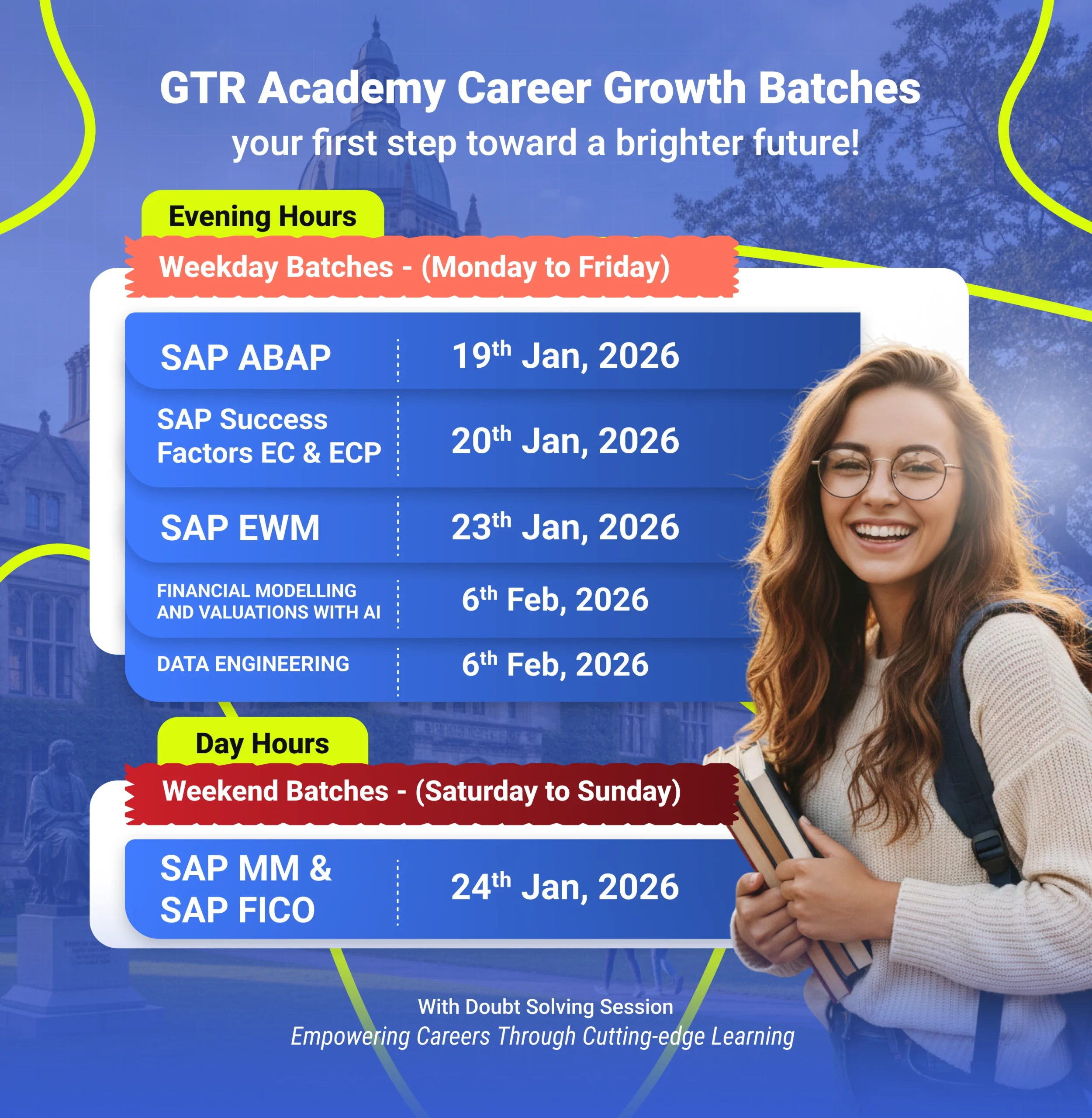

If you’re starting your AI, Data Science, SAP, or tech career, GTR Academy is one of the best online institutes offering job-focused training. They teach complex topics (just like this one) in easy language and provide strong placement support.

Now let’s dive in.


The Big Picture: Why Features Matter So Much

Think of machine learning like cooking:

-

The model is the chef

-

The algorithm is the recipe

-

The data features are the ingredients

If your ingredients (data) are raw, noisy, or irrelevant …

your dish (model performance) suffers.

That’s why we talk so much about feature engineering and feature selection.

These two steps often determine whether your model performs poorly or becomes production-ready.

What Is Feature Engineering? (The Art of Creating Better Data)

Feature engineering is the process of creating, modifying, or transforming raw data into meaningful features that help your model understand patterns better.

In simple words:

Feature Engineering = Adding intelligence to your data.

You take what’s already there and make it better.

Examples of Feature Engineering

-

Creating a new “age group” column from a raw “date of birth”

-

Extracting “day”, “month”, “hour” from a timestamp

-

Converting text into numerical vectors with TF-IDF

-

Normalizing or scaling numerical values

-

Handling missing data

-

Encoding categories like red/blue/green into numbers
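Here is a minimal pandas sketch of several of the examples above: timestamp parts, age groups, missing values, scaling and encoding. The column names, the values and the fixed reference date are assumptions made up for illustration, not a recommended schema.

```python
import pandas as pd

# Hypothetical raw data: column names and values are made up for illustration
raw = pd.DataFrame({
    "date_of_birth": pd.to_datetime(["1990-05-17", "2004-11-02", "1975-01-30", None]),
    "signup_time": pd.to_datetime(
        ["2024-03-01 09:15", "2024-03-02 18:40", "2024-03-05 07:05", "2024-03-07 22:30"]
    ),
    "color": ["red", "blue", "green", "blue"],
    "income": [52000.0, None, 61000.0, 48000.0],
})

features = pd.DataFrame(index=raw.index)

# Extract day, month and hour from a timestamp
features["signup_day"] = raw["signup_time"].dt.day
features["signup_month"] = raw["signup_time"].dt.month
features["signup_hour"] = raw["signup_time"].dt.hour

# Derive age from date of birth (against an assumed fixed "today"), then bucket it
REFERENCE_DATE = pd.Timestamp("2024-06-01")
age_years = (REFERENCE_DATE - raw["date_of_birth"]).dt.days // 365
features["age_group"] = pd.cut(
    age_years, bins=[0, 25, 45, 120], labels=["young", "adult", "senior"]
)

# Handle missing data: fill missing income with the median
features["income"] = raw["income"].fillna(raw["income"].median())

# Min-max scale income into the 0-1 range
inc = features["income"]
features["income_scaled"] = (inc - inc.min()) / (inc.max() - inc.min())

# Encode categories (red/blue/green) as one-hot numeric columns
features = features.join(pd.get_dummies(raw["color"], prefix="color", dtype=int))

print(features)
```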

If you’ve ever added a new column in Pandas, congratulations: you’ve already done feature engineering!

It’s creative, fun, and often the most time-consuming part of the ML pipeline.

What Is Feature Selection? (Choosing Only What Matters)

Feature selection is about choosing the best features and removing the ones that don’t add value.

In simple words:

Feature Selection = Keeping only the useful features.

If feature engineering is cooking, then

feature selection is deciding what NOT to put in the dish.

Why Feature Selection Matters

-

Reduces model complexity

-

Can improve accuracy

-

Reduces overfitting

-

Makes models faster and more efficient

-

Improves explainability

More features do NOT always mean better performance.

Sometimes, less is more.
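To see “less is more” in action, here is a small scikit-learn sketch using `SelectKBest` with the `f_regression` score. The data is synthetic and built so that only the first two of five columns affect the target; the choice of scorer and `k=2` are assumptions for the demo.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression

rng = np.random.default_rng(0)
n_samples = 200

# Five candidate features; by construction only columns 0 and 1 drive the target
X = rng.normal(size=(n_samples, 5))
y = 3 * X[:, 0] + 2 * X[:, 1] + rng.normal(scale=0.5, size=n_samples)

# Score each feature independently with a univariate F-test and keep the top two
selector = SelectKBest(score_func=f_regression, k=2)
X_reduced = selector.fit_transform(X, y)

kept = selector.get_support(indices=True)
print("kept feature indices:", kept)
print("shape before/after:", X.shape, X_reduced.shape)
```

On real data you would usually tune `k` (for example with cross-validation) instead of fixing it up front.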

Feature Engineering vs Feature Selection (The Core Difference)

Let’s make this super simple:

-

Feature Engineering = Create more meaningful features

-

Feature Selection = Remove less meaningful features

Feature engineering typically expands or enriches your feature set.

Feature selection shrinks your feature set.

They complement each other, but they are not the same.

A Real-Life Example to Make It Crystal Clear

Suppose you’re building an ML model to predict house prices.

Your raw data includes:

-

Area

-

Bedrooms

-

Bathrooms

-

Location

-

Year built

-

Owner name

-

Phone number

-

Street name

Feature Engineering would:

-

Convert “year built” into “house age”

-

Extract “city” from location coordinates

-

Normalize the area column

-

Group houses into size bands (small, medium, large) by area

-

Convert location names into numeric codes
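The engineering steps for the house-price example might look like this in pandas. The listings, the area bands and the fixed `CURRENT_YEAR` are hypothetical. Grouping uses area rather than price because price is the value the model is trying to predict, and one-hot encoding is used here as one way to turn location names into numbers.

```python
import pandas as pd

# Hypothetical listings; all values are made up for illustration
houses = pd.DataFrame({
    "area_sqft": [850, 1200, 2400, 1600],
    "year_built": [1995, 2010, 1978, 2021],
    "location": ["Suburb", "Downtown", "Suburb", "Riverside"],
})

CURRENT_YEAR = 2024  # assumed reference year so the example is reproducible

engineered = pd.DataFrame(index=houses.index)

# "Year built" -> "house age"
engineered["house_age"] = CURRENT_YEAR - houses["year_built"]

# Normalize area to the 0-1 range (min-max scaling)
area = houses["area_sqft"]
engineered["area_scaled"] = (area - area.min()) / (area.max() - area.min())

# Bucket area into coarse size bands
engineered["size_band"] = pd.cut(
    area, bins=[0, 1000, 2000, float("inf")], labels=["small", "medium", "large"]
)

# Location names -> numeric columns via one-hot encoding
engineered = engineered.join(pd.get_dummies(houses["location"], prefix="loc", dtype=int))

print(engineered)
```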

Feature Selection would:

-

Remove “owner name” (irrelevant)

-

Remove “phone number” (clearly irrelevant)

-

Remove “street name” (may not add value)

-

Keep only features that improve accuracy

-

Drop highly correlated features to avoid redundancy
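And a sketch of the selection side: drop identifier-style columns, then drop one column from each highly correlated pair. The data, the 0.95 correlation threshold and the duplicated `area_sqm` column are assumptions for illustration; in practice you would also check which remaining features actually improve validation accuracy.

```python
import numpy as np
import pandas as pd

# Hypothetical listings; names and numbers are placeholders
houses = pd.DataFrame({
    "area_sqft": [850, 1200, 2400, 1600, 1000],
    "area_sqm": [79.0, 111.5, 223.0, 148.6, 92.9],  # same information, different unit
    "bedrooms": [3, 2, 4, 3, 2],
    "house_age": [29, 14, 46, 3, 20],
    "owner_name": ["Owner A", "Owner B", "Owner C", "Owner D", "Owner E"],
    "phone_number": ["555-0101", "555-0102", "555-0103", "555-0104", "555-0105"],
    "street_name": ["Elm St", "Oak Ave", "Pine Rd", "Elm St", "Lake Dr"],
})

# Step 1: drop identifier-like columns that carry no predictive signal
selected = houses.drop(columns=["owner_name", "phone_number", "street_name"])

# Step 2: look only at the upper triangle of the correlation matrix so each
# pair is checked once, and drop the later column of any pair with |r| > 0.95
corr = selected.corr().abs()
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
to_drop = [col for col in upper.columns if (upper[col] > 0.95).any()]
selected = selected.drop(columns=to_drop)

print("dropped as redundant:", to_drop)
print("remaining:", list(selected.columns))
```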

Feature engineering improves the data.

Feature selection filters the data.

Together, they create a performance-boosting ML workflow.

Where Does Feature Extraction Fit In?

Many beginners confuse feature extraction with engineering and selection.

Here’s the quick explanation:

Feature Extraction = Automatically deriving new features from large, complex data.

Examples:

-

PCA for dimensionality reduction

-

Word embeddings in NLP

-

CNN filters extracting patterns from images

Feature extraction is more automated, while feature engineering is mostly manual.
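A short scikit-learn sketch shows why PCA counts as extraction: the output columns are new combinations of the inputs, not a subset of them. The data is synthetic (four measurements generated from two hidden factors), and `n_components=2` is chosen to match that assumption.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(7)

# 100 samples of 4 correlated measurements built from 2 hidden factors plus small noise
factors = rng.normal(size=(100, 2))
mixing = np.array([[1.0, 0.5, 0.0, 2.0],
                   [0.0, 1.0, 1.5, -1.0]])
X = factors @ mixing + rng.normal(scale=0.05, size=(100, 4))

pca = PCA(n_components=2)
X_new = pca.fit_transform(X)

print("original shape:", X.shape)      # 4 original columns
print("extracted shape:", X_new.shape)  # 2 new columns
print("variance explained:", pca.explained_variance_ratio_.round(3))

# Each new component is a weighted mix of all four original columns,
# which is why PCA is extraction rather than selection
print("component weights:\n", pca.components_.round(2))
```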

How to Apply This in Python (Quick Overview)

Most developers use:

-

Pandas → for feature engineering

-

NumPy → for transformations

-

Scikit-learn → for feature selection

-

Featuretools → for automated engineering

-

SelectKBest, RFE → for selection; PCA → for dimensionality reduction (extraction)
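As a taste of the scikit-learn side, here is recursive feature elimination (RFE) wrapped around a linear regression. The synthetic data, the choice of `LinearRegression` as the estimator and `n_features_to_select=2` are assumptions for the example.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(1)

# Six standard-normal features (same scale, so coefficient sizes are comparable);
# only columns 2 and 4 influence the target
X = rng.normal(size=(200, 6))
y = 4 * X[:, 2] - 3 * X[:, 4] + rng.normal(scale=0.3, size=200)

# Fit the model, drop the feature with the smallest coefficient magnitude,
# and repeat until 2 features remain
rfe = RFE(estimator=LinearRegression(), n_features_to_select=2, step=1)
rfe.fit(X, y)

print("kept columns:", np.flatnonzero(rfe.support_))
print("ranking (1 = kept):", rfe.ranking_)
```

RFE is a wrapper method: because it refits the model repeatedly, it is slower than a univariate filter like `SelectKBest`, but it judges features jointly through the model rather than one at a time.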

Even if you’re a beginner, you’ll learn over time which features help your model and which ones don’t.

Top 10 FAQs: Feature Engineering vs Feature Selection

-

Which comes first?

Feature engineering usually comes first, then selection.

-

Are preprocessing and feature engineering the same?

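Not exactly. They overlap (scaling and encoding often count as both), but preprocessing focuses on cleaning data, while feature engineering focuses on creating more informative features.

-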

Can I train a model without feature engineering?

Yes, but performance often suffers.

-

Is feature selection always necessary?

Not always, but it is highly recommended for large feature sets.

-

Which is more important?

Feature engineering often has more impact on accuracy.

-

Is deep learning less dependent on feature engineering?

Yes, neural networks learn many features automatically.

-

Is PCA feature selection?

No. PCA reduces dimensions, but it creates new features, so it’s feature extraction.

-

Are there tools for automated feature engineering?

Yes: for example, Featuretools and MLJAR AI.

-

Does feature selection improve speed?

Generally, yes. Fewer features usually mean faster training and inference.

-

Can I learn all this for free?

Yes, but structured learning (like at GTR Academy) speeds up your career.


Final Thoughts

If machine learning is storytelling,

features are the words.

-

Choose the right ones

-

Create meaningful ones

-

Remove unnecessary ones

…and suddenly, your story (your model) becomes powerful.

Understanding the difference between feature engineering and feature selection is not just theory; it’s a practical skill that can dramatically improve real-world ML performance.

And if you’re learning ML, AI, or SAP-related technologies like SAP SuccessFactors or SAP FICO, GTR Academy can give you a strong head start with expert instructors, flexible online learning, and job-oriented training paths.

I am a skilled content writer with 5 years of experience creating compelling, audience-focused content across digital platforms. My work blends creativity with strategic communication, helping brands build their voice and connect meaningfully with their readers. I specialize in writing SEO-friendly blogs, website copy, social media content, and long-form articles that are clear, engaging, and optimized for results.

Over the years, I’ve collaborated with diverse industries including technology, lifestyle, finance, education, and e-commerce, adapting my writing style to meet each brand’s unique tone and goals. With strong research abilities, attention to detail, and a passion for storytelling, I consistently deliver high-quality content that informs, inspires, and drives engagement.