LLMs go viral fast, but they also hallucinate, drift, and cost a lot of money. LLM Ops (also called GenAI Ops) applies Mops to language models, and it monitors quality, costs, and safety while enabling safety updates. Here’s the 2026 playbook.

Connect With Us: WhatsApp

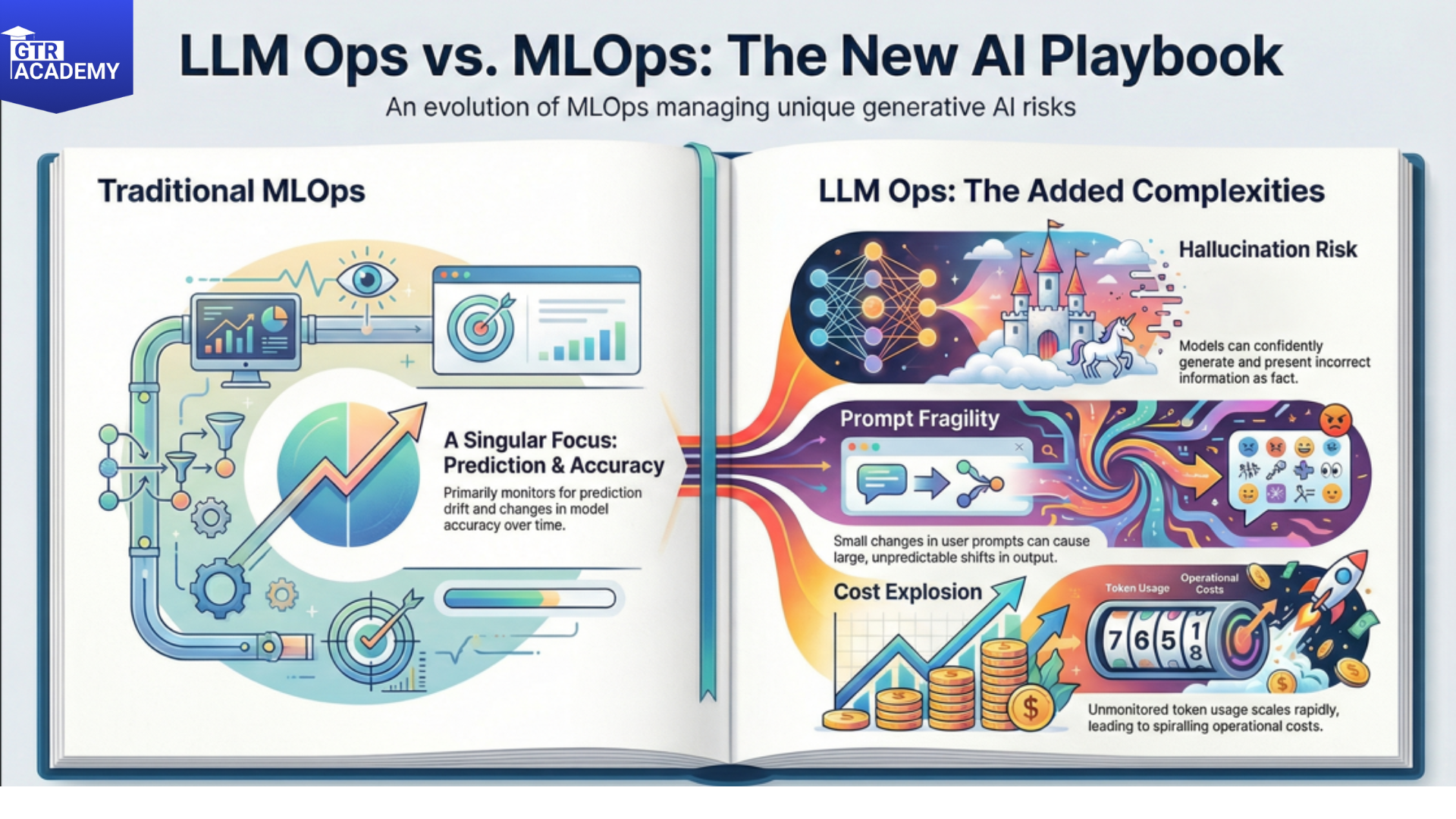

Why LLM Ops is different from traditional Mops

Traditional Machine Learning: monitor prediction drift and accuracy.

LLMs add:

- Hallucination risk: Confidently give wrong answers.

- Prompt fragility: Small changes lead to big output shifts.

- Cost explosion: Token usage scales with adoption.

- Context drift: Retrieved documents or user patterns change.

Teams should have evaluation frameworks and not just mere dashboards.

Core LLM Ops pillars

- Evaluation:

- Human preference: A/B tests or ranking (Hugging Face Open LLM Leaderboard style).

- Automated evaluation: LLM‑as‑judge, regex matching, semantic similarity.

- Custom rubrics: Answer faithfulness, completeness, tone/safety.

- Monitoring:

- Input/ Output drift (embeddings of prompts or responses).

- Latency, token usage, error rates per provider.

- User feedback loops (thumbs up/ thumbs down).

- Experimentation:

- Prompt A/B tests, model/provider comparisons.

- Canary releases (10% traffic → new prompt/version).

Production patterns that work

RAG systems:

Query → Retrieve docs → Augment prompt → LLM → Response + citations

- Monitor: retrieval recall, answer roundedness, citation accuracy.

- Agents/tool calling:

- Track tool success rate, loop length, final resolution.

- Fallbacks for failure modes.

- Fine‑tuned models:

Monitor for catastrophic forgetting, domain drift.

Tooling stack

Start here:

- Eval: Lang Chain eval, Deepavali, Tulins.

- Observability: Phoenix, Lang Smith, Weights & Biases LLM Logger.

- Orchestration: Lang Chain/LlamaIndex with built‑in tracing.

- Cost: OpenAI dashboard + custom token tracking.

- Scaling tip: Instrument everything from day one. Log prompts, retrieved context, tokens, metadata.

Try this: Instrument a simple Q&A RAG app. Log 100 queries, manually eval 20 on a rubric (accuracy, roundedness). Build from there.

Connect With Us: WhatsApp