Feature Stores 101: Centralizing Features for Reuse Across Models

Feature engineering takes a lot of time, yet features are often the single most important factor in model performance.

Feature stores reduce that cost by providing a single, centralized place to define, compute, store, and serve features, so that multiple teams and models can reuse them.


What is a feature store?

A feature store is a data infrastructure layer that:

- Defines features as reusable assets (code plus metadata).

- Computes features online (real time) and offline (batch).

- Serves them to training jobs (in bulk) and to inference endpoints (with low latency).

- Keeps training (the data the model learned from) and serving (the data it receives in production) consistent.

Think of it as a shared feature catalog that replaces feature logic scattered across notebooks and spreadsheets.

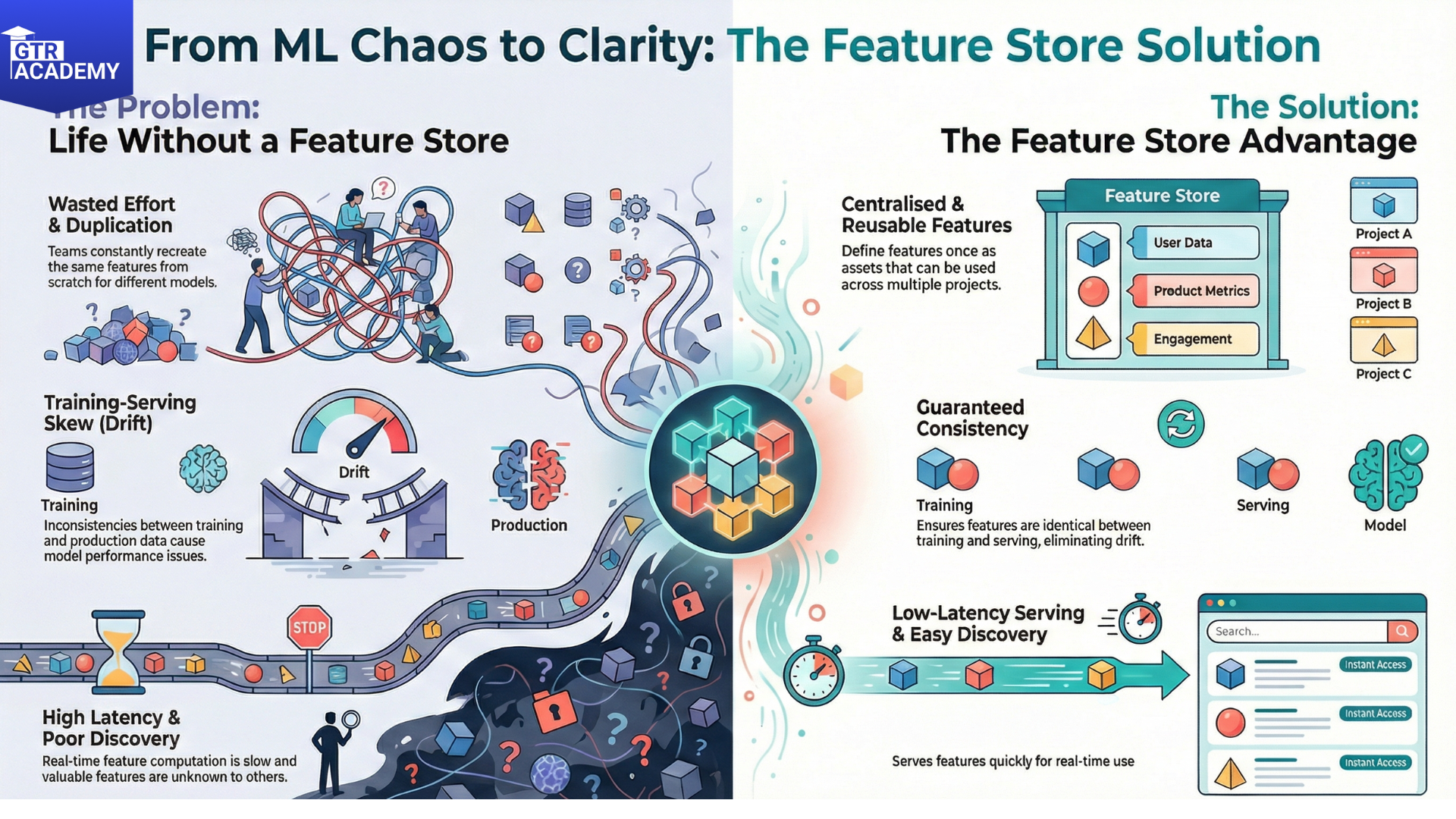

Why feature stores solve real problems

If teams don’t have a feature store, they may encounter the following issues:

- Duplication: Each model rebuilds the same features from scratch (e.g., number of days with a login, average session length).

- Training/serving skew: The features used in training differ subtly from those computed at serving time (e.g., time zone handling, join order).

- Latency: Real-time feature computation is too slow or outright impossible.

- Discovery: Useful features already exist, but nobody knows about them.

With a feature store, data scientists and ML engineers can:

- Share and reuse proven features across models such as fraud, churn, and recommendation.

- Change a definition once and keep training and serving in sync.

- Spend more time on model innovation and less on data plumbing.

Core components

Here is a simplified view:

- Feature definitions: YAML or Python code that describes how to compute each feature (via SQL, Spark, or Python UDFs).

- Offline store: Historical feature values used for training (e.g., Parquet files, Delta tables).

- Online store: The latest feature values, served with low latency at prediction time (e.g., Cassandra, Redis, DynamoDB).

- Registry: Metadata, lineage, versioning, and access controls.

Popular options include the open-source Feast and Hopsworks, and the enterprise platform Tecton.

Real-world example: customer churn features

Here is how a few churn features might be defined:

```yaml
- feature: days_since_last_login
  source: user_events
  logic: datediff(current_date, max(login_date))

- feature: avg_sessions_per_week_28d
  source: user_events
  logic: count(sessions) / 4 over last 28 days

- feature: high_value_support_tickets_90d
  source: support_tickets
  logic: count where severity >= 3 over last 90 days
```
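Here is one way those three definitions could be computed in plain Python for a single user, as of a given date. The record shapes (lists of login dates, session dates, and `(created_date, severity)` ticket tuples) and the choice to count windows inclusively of `as_of` are assumptions for illustration; in practice the same logic would usually be written in SQL or Spark against the `user_events` and `support_tickets` sources.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional, Tuple


def days_since_last_login(login_dates: List[date], as_of: date) -> Optional[int]:
    # datediff(current_date, max(login_date)), ignoring logins after `as_of`.
    past = [d for d in login_dates if d <= as_of]
    return (as_of - max(past)).days if past else None


def avg_sessions_per_week_28d(session_dates: List[date], as_of: date) -> float:
    # count(sessions) / 4 over the 28 days ending on `as_of` (inclusive window, assumed).
    start = as_of - timedelta(days=27)
    return sum(start <= d <= as_of for d in session_dates) / 4


def high_value_support_tickets_90d(tickets: List[Tuple[date, int]], as_of: date) -> int:
    # Tickets with severity >= 3 created in the 90 days ending on `as_of`.
    start = as_of - timedelta(days=89)
    return sum(1 for created, severity in tickets
               if start <= created <= as_of and severity >= 3)


def churn_features(login_dates: List[date], session_dates: List[date],
                   tickets: List[Tuple[date, int]], as_of: date) -> Dict[str, object]:
    # One row of churn features for one user; the same call works for training and serving.
    return {
        "days_since_last_login": days_since_last_login(login_dates, as_of),
        "avg_sessions_per_week_28d": avg_sessions_per_week_28d(session_dates, as_of),
        "high_value_support_tickets_90d": high_value_support_tickets_90d(tickets, as_of),
    }
```

Taking `as_of` as a parameter instead of reading today's date inside each function is what lets the same code produce historical training rows and live serving values.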

Try this: Think of 5-10 features that you calculate again and again across different models. Write down their definitions and sources; this will serve as your first feature registry, which you can later migrate to a full-fledged feature store.
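A written registry becomes more useful once it can be checked automatically. This minimal sketch assumes the registry is a list of dicts with `feature`, `source`, and `logic` keys, mirroring the churn example, and enforces a hypothetical snake_case naming rule; the rules are placeholders to adapt to your own team's conventions.

```python
import re
from typing import Dict, List

REQUIRED_KEYS = ("feature", "source", "logic")
# Hypothetical naming convention: lowercase snake_case, e.g. avg_sessions_per_week_28d.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9_]*$")


def validate_registry(entries: List[Dict[str, str]]) -> List[str]:
    """Return human-readable problems; an empty list means the registry passes."""
    problems: List[str] = []
    seen = set()
    for i, entry in enumerate(entries):
        # Every entry needs a non-empty value for each required key.
        missing = [k for k in REQUIRED_KEYS if not entry.get(k)]
        if missing:
            problems.append(f"entry {i}: missing {', '.join(missing)}")
        name = entry.get("feature", "")
        if name and not NAME_PATTERN.match(name):
            problems.append(f"entry {i}: {name!r} is not snake_case")
        # Duplicate names are exactly the kind of silent redefinition a registry should catch.
        if name and name in seen:
            problems.append(f"entry {i}: duplicate feature {name!r}")
        if name:
            seen.add(name)
    return problems
```

Running a check like this in CI keeps the hand-written registry consistent until it moves into a real feature store.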
