How Product Teams Use Data Science in 2026: From Funnel Analytics to Growth Loops

Data science in product isn’t about bleeding‑edge models; it’s about answering “Did the change move our north star metric?” Product teams rely on funnel analysis, experimentation, and cohort insights to ship faster and improve retention. Here’s the playbook.

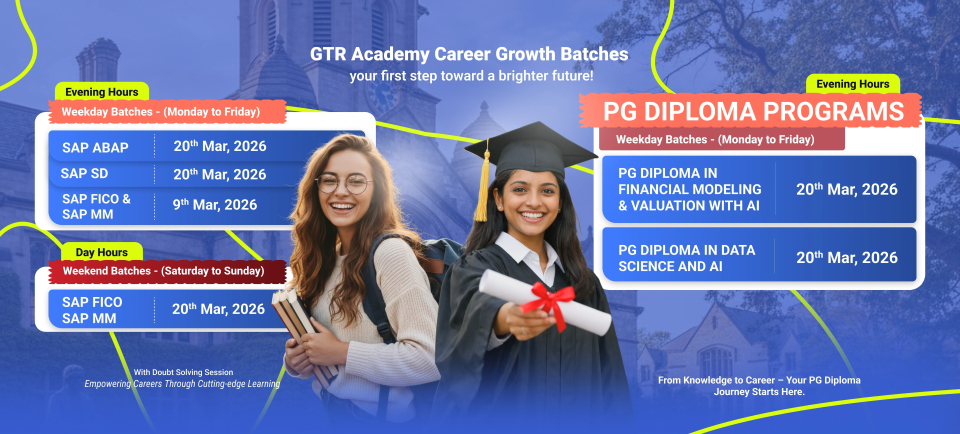

Funnels: Where users actually drop off

Every product has a conversion funnel:

Awareness → Trial → Activation → Retention → Revenue → Referral/NPS
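As a minimal sketch of reading this funnel, assuming you already have a count of users who reached each stage (the counts below are hypothetical, not benchmarks), step‑to‑step conversion shows where the largest leak sits. The helper names are illustrative:

```python
# Hypothetical stage counts for one signup cohort; replace with your own event data.
FUNNEL = [
    ("Awareness", 10_000),
    ("Trial", 2_000),
    ("Activation", 900),
    ("Retention", 450),
    ("Revenue", 120),
    ("Referral/NPS", 30),
]


def step_conversion(funnel):
    """Return (from_stage, to_stage, conversion_rate) for each adjacent pair of stages."""
    steps = []
    for (prev_name, prev_n), (name, n) in zip(funnel, funnel[1:]):
        rate = n / prev_n if prev_n else 0.0  # guard against an empty upstream stage
        steps.append((prev_name, name, rate))
    return steps


def biggest_leak(funnel):
    """The adjacent step with the lowest conversion rate is the biggest leak."""
    return min(step_conversion(funnel), key=lambda step: step[2])


if __name__ == "__main__":
    for src, dst, rate in step_conversion(FUNNEL):
        print(f"{src:>12} -> {dst:<12} {rate:6.1%}")
    print("Biggest leak:", biggest_leak(FUNNEL))
```

With these made‑up counts the weakest step is Awareness → Trial (20%). In practice you would compute the same counts per cohort, device, or acquisition source and compare.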

Where data science adds value:

- Leakage detection: Heatmaps showing drop‑off by cohort, device, and acquisition source.

- Time to value: Days from signup to the first success event (e.g., first day as an active user, first purchase).

- Micro‑conversions: Proxy events that predict activation (tutorial completion, first share).
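Segment‑level leakage and time to value can come from the same per‑user table. The sketch below assumes a hypothetical record per user with a device segment, a signup date, and the date of their first success event (or `None` if they never reached it); `segment_report` is an illustrative helper, not a library API:

```python
from datetime import date
from statistics import median

# Hypothetical per-user records: (user_id, device, signup_date, first_success_date or None).
# "First success" is whatever event you define as value (e.g., first purchase).
USERS = [
    ("u1", "mobile", date(2026, 1, 5), date(2026, 1, 6)),
    ("u2", "mobile", date(2026, 1, 5), None),
    ("u3", "mobile", date(2026, 1, 6), date(2026, 1, 10)),
    ("u4", "mobile", date(2026, 1, 7), None),
    ("u5", "desktop", date(2026, 1, 5), date(2026, 1, 5)),
    ("u6", "desktop", date(2026, 1, 6), date(2026, 1, 8)),
    ("u7", "desktop", date(2026, 1, 7), None),
]


def segment_report(users):
    """Per segment: user count, activation rate, and median days to first success.

    The median only covers users who activated; users with no success event are
    counted in the activation rate but excluded from the time-to-value median.
    """
    by_segment = {}
    for _, segment, signup, first_success in users:
        by_segment.setdefault(segment, []).append((signup, first_success))

    report = {}
    for segment, rows in by_segment.items():
        days = [(success - signup).days for signup, success in rows if success is not None]
        report[segment] = {
            "users": len(rows),
            "activation_rate": len(days) / len(rows),
            "median_days_to_value": median(days) if days else None,
        }
    return report


if __name__ == "__main__":
    for segment, stats in segment_report(USERS).items():
        print(segment, stats)
```

A leakage heatmap is essentially this table computed across many segments (device × source × cohort week) and colored by activation rate. Because the median ignores users who never activated, read it alongside the activation rate rather than on its own.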

Example insight: “Signup‑to‑activation conversion drops 25% on mobile; test a simplified onboarding flow.”

Experimentation: Beyond basic A/B testing

A mature experimentation cadence looks like this:

- PMs form hypotheses; data scientists design the test (sample size, power, metrics).

- Multi‑armed bandits for ongoing optimization.

- Sequential testing to stop early once the evidence is conclusive, without inflating the false‑positive rate.

- Holdouts to measure long‑term effects (e.g., 90‑day retention).
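For the design step in the first bullet, a common starting point is the normal‑approximation sample size for comparing two conversion rates. This sketch assumes a two‑sided, fixed‑horizon test with equal‑sized arms; it does not cover bandits or sequential designs, which need different math. `sample_size_per_arm` is a hypothetical helper name:

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control, p_treatment, alpha=0.05, power=0.80):
    """Users needed in EACH arm to detect p_control -> p_treatment with a
    two-sided, two-proportion z-test (normal approximation, equal arms)."""
    if p_control == p_treatment:
        raise ValueError("effect size must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_control + p_treatment) / 2          # pooled rate under the null
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_treatment * (1 - p_treatment))
    ) ** 2
    return math.ceil(numerator / (p_control - p_treatment) ** 2)


if __name__ == "__main__":
    # Baseline activation of 10%, hoping to detect a lift to 12%.
    print(sample_size_per_arm(0.10, 0.12))
```

For a 10% baseline and a hoped‑for 12%, this asks for a bit over 3,800 users per arm. Because the required sample scales with 1/(lift)², halving the detectable lift roughly quadruples it, which is why PMs and data scientists should agree on the minimum meaningful effect before launch.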

Rigorous design guards against common analysis traps: p‑hacking, uncorrected multiple comparisons, and Simpson’s paradox.
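One guard against the multiple‑testing trap, when an experiment reports several metrics, is to adjust the significance threshold. The sketch below uses the Holm–Bonferroni step‑down procedure, one standard choice among several (false‑discovery‑rate methods such as Benjamini–Hochberg are a common alternative). The p‑values are made up for illustration:

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return one boolean per hypothesis: True if rejected under the
    Holm-Bonferroni step-down procedure (controls family-wise error rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    rejected = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p-value against alpha / (m - k).
        if p_values[i] <= alpha / (m - rank):
            rejected[i] = True
        else:
            break  # once one test fails, all larger p-values are retained
    return rejected


if __name__ == "__main__":
    # Hypothetical p-values for four metrics from one experiment.
    print(holm_bonferroni([0.01, 0.04, 0.03, 0.20]))
```

With p‑values of 0.01, 0.04, 0.03, and 0.20, a naive 0.05 cutoff would report three wins; after the Holm adjustment only the first metric survives.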

Cohorts and growth loops

Retention cohorts reveal how many users actually stick around. For example:

- Week 0: 100%

- Week 1: 40%

- Week 4: 20%

- Week 12: 8%

Slice cohorts by acquisition channel, feature flag, and pricing tier. Data science automates these tables and alerts on unexpected drops.
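Automated alerting can be as simple as comparing each new cohort against earlier ones at the same week. This is a minimal sketch, assuming retention is already aggregated into a hypothetical `{cohort: {week: share_retained}}` mapping and that a 15% relative drop below the historical mean is worth a look; both the data and the threshold are illustrative:

```python
# Hypothetical retention curves: share of each weekly signup cohort still active at week N.
RETENTION = {
    "2026-W01": {0: 1.00, 1: 0.40, 4: 0.20},
    "2026-W02": {0: 1.00, 1: 0.41, 4: 0.21},
    "2026-W03": {0: 1.00, 1: 0.39, 4: 0.19},
    "2026-W04": {0: 1.00, 1: 0.31},  # too young to have week-4 data yet
}


def retention_alerts(curves, week, tolerance=0.15):
    """Flag cohorts whose retention at `week` is more than `tolerance` (relative)
    below the mean of all earlier cohorts that reached that week."""
    alerts = []
    history = []
    for cohort in sorted(curves):  # cohort keys sort chronologically
        value = curves[cohort].get(week)
        if value is None:
            continue  # cohort has not reached this week yet
        if history:
            baseline = sum(history) / len(history)
            if value < baseline * (1 - tolerance):
                alerts.append((cohort, value, baseline))
        history.append(value)
    return alerts


if __name__ == "__main__":
    for cohort, value, baseline in retention_alerts(RETENTION, week=1):
        print(f"{cohort}: week-1 retention {value:.0%} vs baseline {baseline:.0%}")
```

Here cohort `2026-W04` is flagged at week 1 (31% against a baseline of about 40%). To slice by channel, feature flag, or pricing tier, run the same check on one mapping per slice.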

Growth loops (when the loop coefficient k exceeds 1.0, each cycle brings in more users than the one before):

- Viral: invites → signups (Dropbox).

- Content: blog → leads → customers.

- Product: usage → sharing → acquisition.

Data science measures loop velocity (how long one cycle takes), attribution, and saturation.
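For the viral loop, a common definition is k = (invites sent per user) × (share of invites that convert to signups). The sketch below computes k and projects cumulative users cycle by cycle, assuming every new user invites at the same rate and ignoring saturation; the function names are hypothetical:

```python
def viral_coefficient(invites_per_user, invite_conversion):
    """k = average invites sent per user x share of invites that become signups."""
    return invites_per_user * invite_conversion


def simulate_loop(seed_users, k, cycles):
    """Cumulative users after each loop cycle, assuming a constant k
    (every new user invites at the same rate; no saturation)."""
    total, new = seed_users, seed_users
    history = [total]
    for _ in range(cycles):
        new = new * k  # users recruited by the previous cycle's newcomers
        total += new
        history.append(total)
    return history


if __name__ == "__main__":
    print(viral_coefficient(invites_per_user=4, invite_conversion=0.25))
    print(simulate_loop(seed_users=1000, k=0.5, cycles=3))
    print(simulate_loop(seed_users=1000, k=1.2, cycles=3))
```

With k = 0.5 the loop still adds users but levels off near seed / (1 − k), here 2,000; above 1.0 each cycle is bigger than the last. Loop velocity sets how fast those cycles happen: if one cycle takes about a week, `cycles=4` is roughly a month, and shortening the cycle speeds growth even when k is unchanged.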

Data science + product partnership patterns

What works:

- Embedded data science: One analyst per product squad.

- Self‑serve layer: dbt marts + Looker for PM exploration.

- Experimentation platform: Integrated stats engine + warehouse.

- Weekly rituals: Hypothesis reviews, post‑mortem metric deep‑dives.

Try this: Build your product’s D7 retention cohort table by acquisition source. Where is the biggest drop? That’s your PM’s next experiment.
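To get started on that exercise, here is a minimal sketch, assuming a hypothetical signups mapping (user → acquisition source, signup date) and a list of (user, active date) events. It uses the stricter “active exactly on day 7” definition of D7; some teams count any activity on or after day 7, so check which one your dashboards use:

```python
from datetime import date, timedelta

# Hypothetical signups: user_id -> (acquisition_source, signup_date).
SIGNUPS = {
    "a": ("paid_social", date(2026, 1, 1)),
    "b": ("paid_social", date(2026, 1, 1)),
    "c": ("paid_social", date(2026, 1, 2)),
    "d": ("organic", date(2026, 1, 1)),
    "e": ("organic", date(2026, 1, 2)),
    "f": ("referral", date(2026, 1, 3)),
}

# Hypothetical activity log: (user_id, date the user was active).
EVENTS = [
    ("a", date(2026, 1, 8)),
    ("b", date(2026, 1, 3)),
    ("d", date(2026, 1, 8)),
    ("e", date(2026, 1, 9)),
    ("f", date(2026, 1, 10)),
]


def d7_retention_by_source(signups, events):
    """Share of each source's signups that were active exactly 7 days after signup."""
    active = set(events)  # fast (user, day) membership checks
    totals, retained = {}, {}
    for user, (source, signup_day) in signups.items():
        totals[source] = totals.get(source, 0) + 1
        if (user, signup_day + timedelta(days=7)) in active:
            retained[source] = retained.get(source, 0) + 1
    return {source: retained.get(source, 0) / n for source, n in totals.items()}


def weakest_source(retention):
    """Source with the lowest D7 retention: a candidate for the next experiment."""
    return min(retention, key=retention.get)


if __name__ == "__main__":
    table = d7_retention_by_source(SIGNUPS, EVENTS)
    for source, rate in sorted(table.items(), key=lambda kv: kv[1]):
        print(f"{source:<12} {rate:.0%}")
    print("Weakest source:", weakest_source(table))
```

On real data, include only users who signed up at least seven days ago; otherwise recent signups count as “not retained” simply because day 7 hasn’t happened yet.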