Bias vs Variance in Machine Learning: A Simple Intuition with Visuals 2025

Have you ever felt torn between choosing a model that is too rigid and one that is too sensitive? Welcome to the bias-variance trade-off, the ongoing balancing act that every machine learning practitioner deals with. If you’ve been struggling to grasp what these terms truly mean, you’re in the right place. Let’s break it down simply.


The Real Problem No One Addresses

When we create machine learning models, we aim to capture the true pattern in our data. However, this isn’t straightforward. Our models can fail in two different ways, and understanding these failures is key to improving your skills.

Imagine you are learning to throw darts. Your goal is to hit the center of the dartboard consistently. Some people might always throw to the left side of the board, regardless of how many times they try. Others might throw all over the place, occasionally hitting the center but often missing wildly. The first person has a bias problem, while the second has a variance problem. In machine learning, we encounter similar challenges.

What Is Bias in Machine Learning, Really?

Bias is like having a blind spot. It’s when your model makes overly simplistic assumptions about the data and so gets the same things wrong consistently, no matter how much data you train it on.

Think about fitting a straight line to data that is actually curved. No matter how much training data you provide, that straight line will always miss the mark. This is high bias. Your model is too rigid and overly confident in its incorrect assumptions. It underfits the data, meaning it fails to capture the actual complexity of the problem.

High bias usually arises from models that are too simple.

A linear regression model trying to tackle a nonlinear problem? High bias.

Decision trees with only one split? Same issue.

The model just doesn’t have enough complexity to learn the real pattern.
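Here is a minimal sketch of the straight-line example, using only the Python standard library. The data-generating curve (y = x² plus Gaussian noise), the noise level, and the helper names `make_data`, `fit_line`, and `line_test_mse` are all assumptions made for illustration. The point is to check whether more training data rescues a model whose shape is wrong.

```python
import random
import statistics

def make_data(n, rng, noise=0.1):
    """Sample n points from the assumed true curve y = x^2 plus Gaussian noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x * x + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def line_test_mse(n_train, seed=0):
    """Fit a straight line on n_train points and score it on fresh test data."""
    rng = random.Random(seed)
    xs, ys = make_data(n_train, rng)
    slope, intercept = fit_line(xs, ys)
    test_x, test_y = make_data(2000, rng)
    return statistics.fmean(
        (slope * x + intercept - y) ** 2 for x, y in zip(test_x, test_y)
    )

# The noise floor is noise**2 = 0.01; a well-specified model could get close to it.
results = {n: line_test_mse(n) for n in (20, 200, 2000)}
for n, err in results.items():
    print(f"train size {n:>4}: test MSE = {err:.4f}")
```

Under these assumptions the test error should stay well above the noise floor at every training size, because the line cannot bend. That gap is the bias.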

Understanding Variance in Machine Learning Models

Now let’s look at variance. Variance is like having a wandering mind: your model picks up on every little fluctuation in the training data, treating random noise as if it were a real pattern.

Imagine fitting a highly wiggly, complex curve to data points. It touches every single data point perfectly, but when you test it on new data, it performs poorly. Your model learned the training data so well that it also learned the noise. This is high variance, and it leads to overfitting.

A complex model like a deep decision tree or a neural network with too many parameters can easily fall into this trap. Each time you tweak the training data slightly, your model’s predictions change dramatically.
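To make this concrete, here is a small sketch that uses a 1-nearest-neighbour regressor as a stand-in for any very flexible model. That choice, the synthetic data (y = x² plus noise), and the helper names are assumptions for illustration only. The model simply copies the label of the closest training point, so it memorizes the noise along with the pattern.

```python
import random
import statistics

def make_data(n, rng, noise=0.3):
    """Sample n points from the assumed true curve y = x^2 plus Gaussian noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x * x + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def predict_1nn(train_x, train_y, x):
    """Return the label of the single closest training point."""
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]

def mse(train_x, train_y, xs, ys):
    """Mean squared error of the 1-NN model on the points (xs, ys)."""
    return statistics.fmean(
        (predict_1nn(train_x, train_y, x) - y) ** 2 for x, y in zip(xs, ys)
    )

rng = random.Random(0)
train_x, train_y = make_data(50, rng)
test_x, test_y = make_data(500, rng)

train_mse = mse(train_x, train_y, train_x, train_y)  # every point is its own neighbour
test_mse = mse(train_x, train_y, test_x, test_y)
print("train MSE:", train_mse)
print("test MSE: ", round(test_mse, 3))

# Retrain on 30 different training sets and watch one prediction move around.
preds = []
for seed in range(1, 31):
    tx, ty = make_data(50, random.Random(seed))
    preds.append(predict_1nn(tx, ty, 0.5))
spread = statistics.pstdev(preds)
print("spread of predictions at x = 0.5:", round(spread, 3))
```

The training error is zero because each training point is its own nearest neighbour, while the test error is not. The spread of the prediction at a single input across retrainings is the variance the section describes.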

The Bias-Variance Trade-Off Explained

Here is where things get interesting. You can’t have zero bias and zero variance at the same time. They work against each other, creating the well-known bias-variance trade-off.
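For squared-error loss, the trade-off is usually written as the standard decomposition below. The notation is introduced here for illustration and is not from the text above: f is the true pattern, f̂ is the model trained on a random training set, y = f(x) + ε with noise variance σ², and the expectation runs over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The first term measures how far the average prediction is from the truth, the second how much predictions move when the training data changes, and the third is noise that no model can remove.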

Increasing model complexity lowers bias but raises variance. At first the drop in bias outweighs the rise in variance, so total error falls. Past a certain point, variance grows faster than bias shrinks, and that’s when overfitting begins.

The sweet spot, the point where total error is minimized, lies in the middle. It’s all about balancing both.
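The sweet spot can be seen in a small simulation. The sketch below is only an illustration under stated assumptions: it uses k-nearest-neighbour regression on synthetic data (y = x² plus noise), where a small k means a flexible model and a large k means a rigid one. Because the true function is known, bias² and variance can be estimated by retraining on many fresh training sets. All names and settings are hypothetical.

```python
import random
import statistics

def true_f(x):
    return x * x  # assumed true pattern

def make_data(n, rng, noise=0.3):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def predict_knn(train_x, train_y, x, k):
    """Average the labels of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda j: abs(train_x[j] - x))[:k]
    return statistics.fmean(train_y[j] for j in nearest)

def bias_variance(k, n_train=60, n_repeats=100, seed=0):
    """Estimate average bias^2 and variance over a grid of test inputs."""
    rng = random.Random(seed)
    grid = [i / 10 - 1.0 for i in range(21)]  # -1.0, -0.9, ..., 1.0
    preds = [[] for _ in grid]
    for _ in range(n_repeats):
        tx, ty = make_data(n_train, rng)  # a fresh training set each time
        for i, x in enumerate(grid):
            preds[i].append(predict_knn(tx, ty, x, k))
    bias_sq = statistics.fmean(
        (statistics.fmean(p) - true_f(x)) ** 2 for p, x in zip(preds, grid)
    )
    variance = statistics.fmean(statistics.pvariance(p) for p in preds)
    return bias_sq, variance

# k = 1 is the most flexible model; k = 60 averages the whole training set.
table = {k: bias_variance(k) for k in (1, 5, 20, 60)}
for k, (b, v) in table.items():
    print(f"k={k:>2}  bias^2={b:.4f}  variance={v:.4f}  sum={b + v:.4f}")
```

In this setup the most flexible model should show the lowest bias and the highest variance, the most rigid one the reverse, and the smallest sum should land at an intermediate k.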

Visualizing the Target Analogy

Picture a target with concentric circles. Each shot represents a prediction made by your model using different training data samples.

- High bias, low variance: Shots are tightly clustered but far from the center.
- High variance, low bias: Shots are scattered widely, though centered on the bullseye on average.
- Low bias, low variance: Shots land near the center consistently. This is the ideal model.
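The three targets above can be simulated in a few lines. This is a toy sketch, not a model of any real learner: the aim offsets, the scatter values, and the helper names `throw` and `summarize` are made up for illustration. Bias is taken as the distance of the average shot from the bullseye, and variance as the mean squared distance of the shots from their own average.

```python
import math
import random

def throw(offset, spread, n, rng):
    """Simulate n dart throws aimed at `offset` with Gaussian scatter `spread`."""
    return [
        (rng.gauss(offset[0], spread), rng.gauss(offset[1], spread))
        for _ in range(n)
    ]

def summarize(shots):
    """Return (bias, variance) relative to a bullseye at (0, 0)."""
    mx = sum(x for x, _ in shots) / len(shots)
    my = sum(y for _, y in shots) / len(shots)
    bias = math.hypot(mx, my)  # distance of the average shot from the center
    variance = sum((x - mx) ** 2 + (y - my) ** 2 for x, y in shots) / len(shots)
    return bias, variance

rng = random.Random(0)
throwers = {
    "high bias, low variance": ((3.0, 0.0), 0.3),
    "high variance, low bias": ((0.0, 0.0), 3.0),
    "low bias, low variance": ((0.0, 0.0), 0.3),
}
results = {}
for name, (offset, spread) in throwers.items():
    results[name] = summarize(throw(offset, spread, 500, rng))
    bias, variance = results[name]
    print(f"{name}: bias={bias:.2f}, variance={variance:.2f}")
```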

How to Spot These Problems in Your Model?

High Bias Symptoms (Underfitting)

Is your model scoring poorly on the training data and just as poorly on the test data? That’s bias speaking. Your model is underfitting.

Solutions:

- Add model complexity
- Use more features
- Switch to a more powerful algorithm
- Reduce regularization

High Variance Symptoms (Overfitting)

Is your training accuracy 99% but test accuracy 70%? This is high variance.

Solutions:

- Apply regularization (L1, L2, dropout)
- Add more training data
- Simplify the model
- Use ensemble methods that average many models, such as bagging or random forests (boosting mainly targets bias)
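The two symptom checks above can be sketched as a small helper. The function name `diagnose` and the thresholds `target_acc` and `max_gap` are hypothetical. Sensible values depend on the problem, so treat this as a rule of thumb rather than a test with fixed cutoffs.

```python
def diagnose(train_acc, test_acc, target_acc=0.90, max_gap=0.05):
    """Rough rule of thumb for reading train vs test accuracy.

    target_acc: accuracy you would expect a good model to reach (assumed).
    max_gap:    largest train/test gap you are willing to tolerate (assumed).
    """
    gap = train_acc - test_acc
    if train_acc < target_acc:
        # The model struggles even on data it has already seen.
        if gap > max_gap:
            return "high bias and high variance"
        return "high bias (underfitting)"
    if gap > max_gap:
        # Great on training data, much worse on new data.
        return "high variance (overfitting)"
    return "reasonable fit"

print(diagnose(0.99, 0.70))  # → high variance (overfitting)
print(diagnose(0.72, 0.70))  # → high bias (underfitting)
print(diagnose(0.95, 0.93))  # → reasonable fit
```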

Addressing High Bias in Your Models

If your model suffers from high bias (underfitting), add complexity. Use a more flexible algorithm, engineer more informative features, or reduce regularization. Simply collecting more rows of the same data rarely helps here, because the model’s assumptions are the bottleneck.

Tackling High Variance Issues

High variance calls for reining the model in. Applying regularization, adding more training data, simplifying your model, or averaging many models with bagging can significantly reduce variance.
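Here is a minimal sketch of bagging, applied to a 1-nearest-neighbour regressor as a stand-in for any high-variance model. The synthetic data, the number of bootstrap models, and the helper names are assumptions for illustration. Each bagged prediction averages models trained on bootstrap resamples of the same training set.

```python
import random
import statistics

def make_data(n, rng, noise=0.3):
    """Sample n (x, y) pairs from the assumed curve y = x^2 plus Gaussian noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, x * x + rng.gauss(0.0, noise)) for x in xs]

def predict_1nn(train, x):
    """Return the label of the training pair whose input is closest to x."""
    return min(train, key=lambda p: abs(p[0] - x))[1]

def predict_bagged(train, x, rng, n_models=25):
    """Average 1-NN predictions over bootstrap resamples of the training set."""
    preds = []
    for _ in range(n_models):
        resample = rng.choices(train, k=len(train))  # sample with replacement
        preds.append(predict_1nn(resample, x))
    return statistics.fmean(preds)

rng = random.Random(0)
single, bagged = [], []
for _ in range(200):
    train = make_data(50, rng)  # a fresh training set each round
    single.append(predict_1nn(train, 0.5))
    bagged.append(predict_bagged(train, 0.5, rng))

var_single = statistics.pvariance(single)
var_bagged = statistics.pvariance(bagged)
print(f"variance of single model at x = 0.5: {var_single:.4f}")
print(f"variance of bagged model at x = 0.5: {var_bagged:.4f}")
```

Averaging smooths out the noise that any single model latches onto, so the bagged prediction should vary less from one training set to the next.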

Real-World Applications for Your Systems

Understanding bias and variance helps avoid mistakes like overfitting or underfitting when working on real-world problems such as:

- Predicting house prices
- Image classification
- Sales forecasting
- Demand prediction
- Fraud detection

This knowledge helps you design smarter, more accurate models.

Top 10 FAQs About Bias and Variance

Q1: Can I have zero bias and zero variance at the same time?

No. Reducing one usually increases the other.

Q2: Which is worse, high bias or high variance?

Both are problematic, but high bias often means your model can’t capture the problem at all.

Q3: How do I measure bias and variance separately?

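On real data, not directly, because the true pattern is unknown. You diagnose them by comparing training and validation performance. On synthetic data, where the true function is known, you can estimate both by retraining on many resampled datasets.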

Q4: Does more data always reduce variance?

Generally, yes, though with diminishing returns. More data does not fix high bias.

Q5: Can a model have high bias and high variance?

Yes, though it is less common. It tends to show up in poorly constructed models.

Q6: What is the relationship between bias, variance, and model complexity?

As complexity increases, bias decreases while variance increases.

Q7: Is regularization only for reducing variance?

Mostly yes. It lowers variance by constraining the model, usually at the cost of a small increase in bias.

Q8: How do ensemble methods help with bias-variance?

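Bagging-style ensembles, such as random forests, reduce variance by averaging many models. Boosting works differently: it mainly reduces bias by combining many weak learners in sequence.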

Q9: Can deep learning models overcome the trade-off?

They still face the trade-off, though with enough data they perform well.

Q10: Why is this important?

It helps you understand why models fail and how to fix them.

Learn More About Machine Learning Excellence

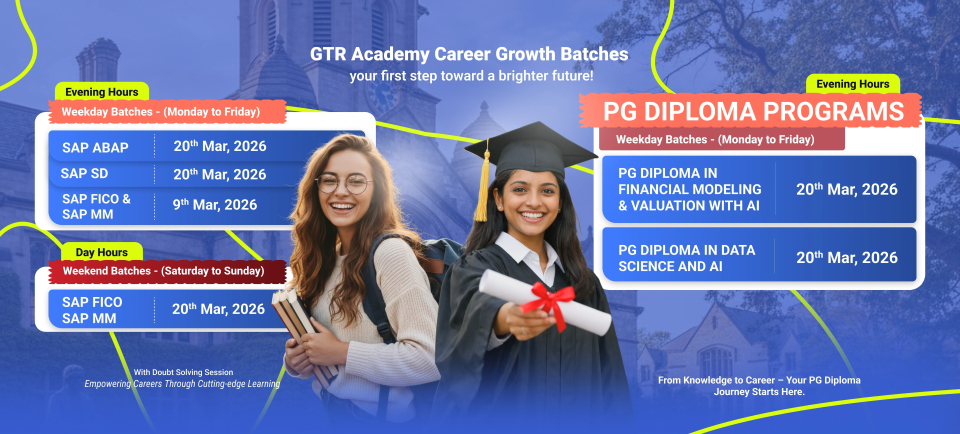

If you’re serious about learning machine learning and related skills, GTR Academy offers some of the best online courses for SAP and data science. GTR Academy provides structured learning paths, industry expert instructors, and hands-on projects that connect theory to practice.

Whether you’re studying SAP, advanced analytics, or diving deeper into machine learning algorithms, GTR Academy’s quality courses combine valuable content with practical application exactly what you need to succeed in today’s competitive tech world.


Final Thoughts

The bias-variance trade-off is something to understand, not to fear. It helps you build models strategically instead of by guessing.

Start observing training vs test accuracy. Ask yourself whether your model is too rigid or too flexible. This simple awareness can dramatically elevate your machine learning skills.

Happy modelling!
