Decisions with data, not just with their gut feeling or opinions.

A/B testing is a controlled way of answering the question “Did the change really improve the situation?” with numbers.

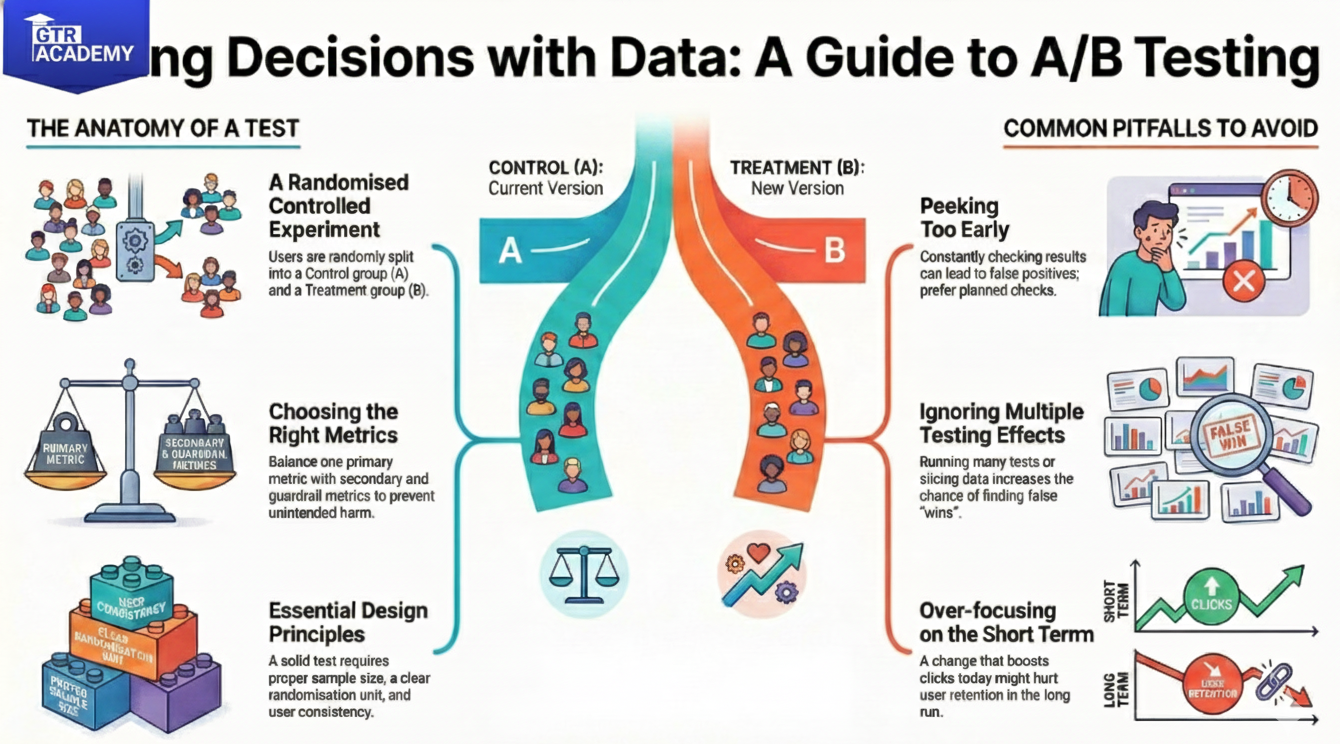

What is an A/B test?

At its core, an A/B test is a randomized controlled experiment in which you divide users (or sessions) into at least two groups:

- Control (A): experiences the current version.

- Treatment (B): experiences the new variant (e.g., a new layout, algorithm, or message).

You measure a metric (conversion rate, click-through rate, revenue per user) for both groups. You then use statistical tests to judge, at a chosen confidence level, whether the observed difference is due to the change rather than random noise. Randomization is the key: it makes the groups similar on average, so any difference in outcomes can be attributed to the change.
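
As a minimal sketch of that last step, the snippet below runs a two-sided two-proportion z-test on conversion counts using only the Python standard library. The function name `two_proportion_z_test` and the counts are made up for illustration, and the z-test is just one common choice for comparing conversion rates, not the only valid one.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference in conversion rates (B minus A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Probability of a difference at least this extreme if only noise is at work.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


# Hypothetical counts: 500/10,000 conversions in control, 560/10,000 in treatment.
diff, z, p_value = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
print(f"absolute lift={diff:.4f}, z={z:.2f}, p-value={p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be noise alone. The threshold you compare it against (often 0.05) should be fixed before the experiment starts.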


Choosing metrics and guardrails

To keep things practical, focus on metric design:

- Primary metric (the north star for the experiment): for example, signup conversion rate, checkout completion, or retention after N days.

- Secondary metrics: supporting signals such as click-through rate, time on task, or feature usage.

- Guardrail metrics: safety checks that should not deteriorate significantly (for instance, error rate, latency, churn, or complaint volume).

A good experiment has one clear decision metric plus a few guardrails, so that “winning” changes do not come at the cost of user experience or long-term value.
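
One way to picture how a decision metric and guardrails combine is the small decision rule sketched below. Everything in it is hypothetical: the function name `ship_decision`, the metric names, and the limits are illustrative, and it assumes each guardrail change is expressed so that a positive number means "worse". A real setup would typically test guardrails statistically rather than compare point estimates to fixed limits.

```python
def ship_decision(primary_lift, primary_p_value, guardrail_changes,
                  guardrail_limits, alpha=0.05):
    """Return (decision, breached_guardrails) for one experiment.

    guardrail_changes: observed relative change per guardrail (positive = worse).
    guardrail_limits:  largest deterioration we are willing to accept per guardrail.
    """
    breached = [
        name for name, change in guardrail_changes.items()
        if change > guardrail_limits[name]
    ]
    if breached:
        # A guardrail breach blocks the launch even if the primary metric won.
        return "do not ship", breached
    if primary_lift > 0 and primary_p_value < alpha:
        return "ship", []
    return "inconclusive", []


# Hypothetical result: the primary metric improved, but p95 latency got 8% worse.
decision, breached = ship_decision(
    primary_lift=0.006,
    primary_p_value=0.01,
    guardrail_changes={"error_rate": 0.002, "p95_latency": 0.08},
    guardrail_limits={"error_rate": 0.01, "p95_latency": 0.05},
)
print(decision, breached)
```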

Experiment design basics

A few key design choices:

- Sample size and duration: use power calculations to estimate how many users are needed to detect a meaningful lift, and avoid stopping early while the results are still noisy.

- Randomization unit: this can be the user, session, or account, depending on the case. Avoid cross-contamination (for instance, the same user unintentionally seeing both variants).

- Consistency: make sure users stay assigned to the same variant throughout the experiment (“sticky” assignment).

Modern experimentation platforms take care of much of this, but knowing the basics allows teams to ask the right questions.
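
To make two of these choices concrete, here is a rough sketch using only the Python standard library. `sample_size_per_group` applies the usual normal-approximation formula for comparing two proportions, and `assign_variant` shows one common way to get sticky assignment by hashing the user ID together with the experiment name. Both function names, the default `alpha` and `power` values, and the example experiment name are assumptions for illustration, not a description of any particular platform.

```python
import hashlib
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, min_detectable_lift, alpha=0.05, power=0.80):
    """Approximate users needed per group to detect an absolute lift in a rate."""
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def assign_variant(user_id, experiment_name, treatment_share=0.5):
    """Deterministically map a user to "A" or "B" so the assignment is sticky."""
    key = f"{experiment_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the first 32 bits of the hash into a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < treatment_share else "A"


# Hypothetical scenario: 5% baseline conversion, smallest lift of interest is 1 point.
print(sample_size_per_group(baseline_rate=0.05, min_detectable_lift=0.01))

# The same user always lands in the same group for a given experiment.
print(assign_variant("user-123", "new-checkout-layout"))
print(assign_variant("user-123", "new-checkout-layout"))
```

Including the experiment name in the hash key means a user's bucket in one experiment does not determine their bucket in another. Hashing on the user ID rather than the session ID is what keeps a returning user from seeing both variants.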

Common pitfalls and better habits

A few frequent mistakes:

- Peeking too early: repeatedly checking p-values and stopping as soon as they look good greatly inflates the false positive rate. Use planned checks or sequential testing methods instead.

- Multiple testing without correction: running many experiments, or slicing results by many dimensions, without precautions increases the chance of spurious wins. Use multiple-comparison corrections or pre-registered analyses.

- Over-focusing on short-term metrics: a change that increases clicks this week might decrease retention next week, so consider holdback groups or longer-term follow-ups.

In upcoming posts, we'll show how to run a simple A/B test end to end, from hypothesis and sample size calculation to analysis in Python, so you can bring rigorous experimentation into your product decisions.