Black-box models can deliver impressive results, but they are hard to trust, difficult to debug, and problematic to deploy in regulated sectors. Explainable AI (XAI) tools such as SHAP and LIME make these models more transparent: they show the reasons behind a specific prediction, not just the prediction itself.


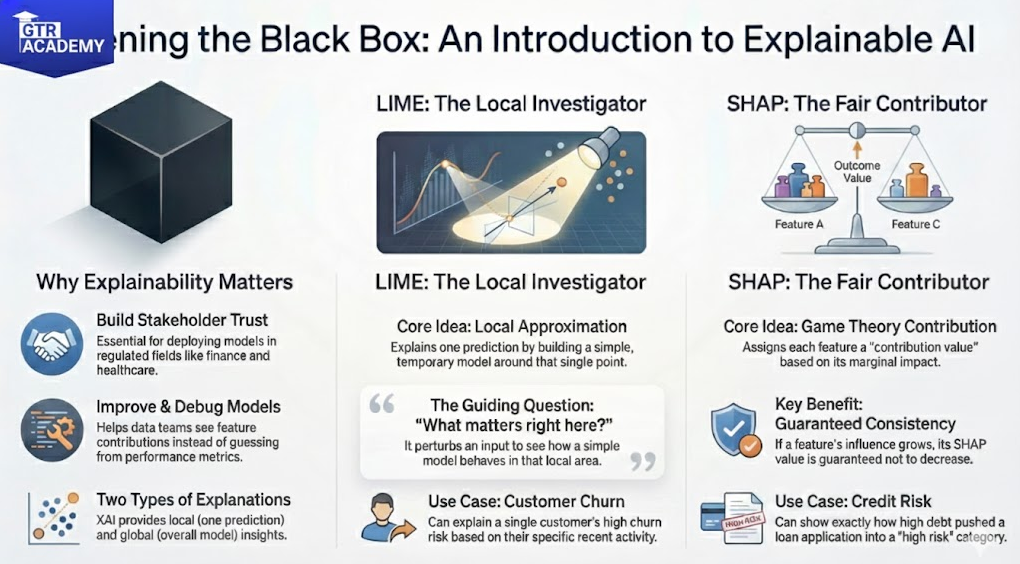

Why Explainability Matters

In many real-life scenarios, such as credit decisions, medical risk scores, churn models, or fraud detection, stakeholders need to understand the model's behavior before they approve deployment. They are especially interested in questions such as:

- Which factors led to this decision?

- Is the model biased?

Explainability also helps data teams: debugging, improving, and monitoring models become significantly easier when you can see how features contribute to predictions instead of guessing from metrics alone.

Local vs Global Explanations

Usually, XAI methods address two sets of questions that complement each other:

- Global explanations: Which features, on average, have the most significant impact on the predictions? Global explanations support business understanding and model governance.

- Local explanations: For this particular prediction, which features drove the outcome? Local explanations are essential when justifying individual decisions to customers or internal reviewers.

SHAP and LIME are both primarily local explanation methods; however, they also reveal global patterns when their explanations are aggregated over a large number of samples.
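A minimal sketch of that aggregation, assuming a hypothetical linear scoring model in which a feature's contribution to one row is its weight times the deviation from the feature's average (this is also what SHAP yields for a linear model when features are treated as independent). The feature names, weights, and rows are invented purely for illustration.

```python
from statistics import mean

# Hypothetical linear scoring model: score = bias + sum(weight * feature value).
WEIGHTS = {"debt_to_income": 2.0, "income": -0.5, "age": 0.1}

# Toy rows, made up for illustration only.
ROWS = [
    {"debt_to_income": 0.9, "income": 3.0, "age": 30.0},
    {"debt_to_income": 0.2, "income": 5.0, "age": 45.0},
    {"debt_to_income": 0.5, "income": 4.0, "age": 60.0},
    {"debt_to_income": 0.4, "income": 8.0, "age": 25.0},
]


def local_contributions(row, rows, weights):
    """Local view: each feature's contribution for one row, relative to the data average."""
    return {f: w * (row[f] - mean(r[f] for r in rows)) for f, w in weights.items()}


def global_importance(rows, weights):
    """Global view: mean absolute local contribution per feature."""
    per_row = [local_contributions(r, rows, weights) for r in rows]
    return {f: mean(abs(c[f]) for c in per_row) for f in weights}


local = local_contributions(ROWS[0], ROWS, WEIGHTS)
ranking = sorted(global_importance(ROWS, WEIGHTS).items(),
                 key=lambda kv: kv[1], reverse=True)

print("Local explanation for row 0:", local)
print("Global ranking:", ranking)
```

The local dictionary answers "why this prediction?", while the ranking answers "what matters overall?". Note that the global ranking depends on feature scale as well as on the weights.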

LIME in Plain Language

LIME (Local Interpretable Model-agnostic Explanations) explains a single prediction by approximating the complex model with a simple, interpretable one that is valid only in the neighborhood of that instance.

In short:

- Take the instance you want to explain and generate many slightly perturbed versions of it.

- Get the black-box model's predictions for these perturbed samples.

- Fit a simple local model (e.g., a sparse linear model) that imitates the black box around that instance.

- Read the explanation from the simple model's coefficients.
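The steps above can be sketched from scratch with NumPy. This is a simplified illustration, not the `lime` library's implementation: it uses Gaussian perturbations, an exponential proximity kernel, and ordinary weighted least squares rather than a sparse model. The `black_box` function, the `lime_sketch` helper, and all parameter values are assumptions chosen for the example.

```python
import numpy as np


def black_box(X):
    """Stand-in for an opaque model (hypothetical): nonlinear in both features."""
    return np.sin(X[:, 0]) + X[:, 1] ** 2


def lime_sketch(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around the instance x."""
    rng = np.random.default_rng(seed)

    # 1. Generate slightly perturbed copies of the instance.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))

    # 2. Ask the black box for predictions on the perturbed samples.
    y = predict(Z)

    # 3. Weight each sample by its proximity to the original instance.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 4. Weighted least squares: scale rows by sqrt(w), then solve.
    #    Columns are [intercept, offset of each feature from x].
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)

    return coef[0], coef[1:]  # local intercept, local feature weights


x0 = np.array([0.0, 1.0])
intercept, weights = lime_sketch(black_box, x0)
print("local intercept:", intercept)
print("local feature weights:", weights)
```

Because the surrogate is fitted only near `x0`, its weights approximate the local slope of the black box at that point, and they can differ substantially for another instance.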

Put differently, LIME fits a small linear regression around one point to tell you what matters right here.

SHAP: Shapley Values for Feature Contributions

SHAP (SHapley Additive exPlanations) draws on concepts from cooperative game theory.

It assigns each feature a contribution to a prediction by averaging its marginal impact across all possible subsets of features. Several characteristics make SHAP very appealing:

- Consistency: If a model changes so that a feature's marginal contribution increases or stays the same, that feature's SHAP value does not decrease.

- Additivity: The prediction equals the baseline plus the sum of the feature contributions.

In practice, SHAP delivers:

- Explanations for each row (waterfall or force plots) showing how the features move the prediction away from the baseline.

- Global summaries (beeswarm and bar plots) revealing the most important features overall and how their values relate to the model output.
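The subset averaging and the additivity property can be checked with an exact, brute-force Shapley computation on a toy model. This is a sketch under simplifying assumptions, not how the `shap` library computes values: "missing" features are replaced by a single baseline row instead of being averaged over a background dataset, and the model, feature names, and numbers are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values: weighted average of marginal contributions over all subsets."""
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            # Weight of a subset of size k in the Shapley formula.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value_fn(s | {f}) - value_fn(s))
        phi[f] = total
    return phi


# Hypothetical baseline row and instance to explain.
BASELINE = {"debt_to_income": 0.3, "delinquencies": 0.0, "income": 5.0}
INSTANCE = {"debt_to_income": 0.8, "delinquencies": 2.0, "income": 6.0}


def model(x):
    """Toy risk score with one interaction term (made up for illustration)."""
    return (2.0 * x["debt_to_income"] + 0.5 * x["delinquencies"]
            - 0.1 * x["income"] + x["debt_to_income"] * x["delinquencies"])


def value_fn(known):
    """Model output when features in `known` take instance values, the rest baseline values."""
    x = {f: (INSTANCE[f] if f in known else BASELINE[f]) for f in INSTANCE}
    return model(x)


phi = shapley_values(value_fn, list(INSTANCE))
base = value_fn(frozenset())

print("baseline:", base)
print("contributions:", phi)
print("baseline + contributions:", base + sum(phi.values()))
print("model prediction:", model(INSTANCE))
```

The last two printed numbers agree up to floating-point rounding, which is the additivity property. The cost grows exponentially with the number of features, which is why practical tools rely on approximations or model-specific algorithms.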


How SHAP and LIME Complement Each Other

Real-world scenarios:

- Credit risk model: Use SHAP to show that a loan application was pushed towards high risk mainly by a high debt-to-income ratio and recent delinquencies, whereas a stable income lowered the risk.

- Churn prediction: Use LIME to explain the churn probability of a single customer based on reduced usage, negative support interactions, and plan changes.

- Pricing and revenue: Use SHAP global plots to reveal that a discount feature dominates predictions more than expected, which may indicate a business rule issue.