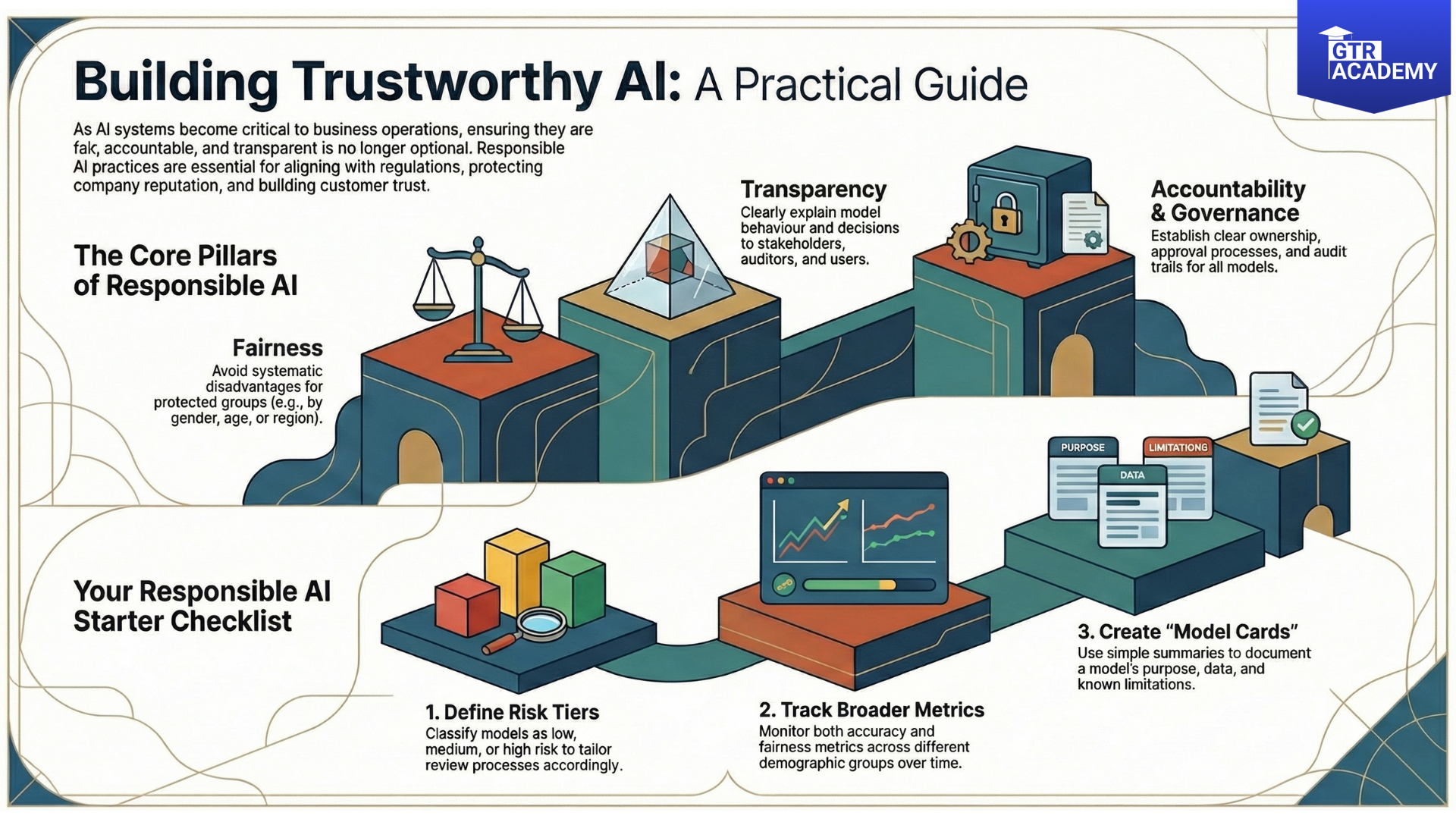

As AI systems move from prototypes into business-critical workflows, fairness, accountability, and compliance stop being optional. Responsible AI means designing, deploying, and monitoring models not only for accuracy but also so that they are safe, explainable, and compliant with regulations.


The scope of responsible AI

Responsible AI spans several dimensions:

- Fairness: Avoiding systematic disadvantage to protected groups (for example, by gender, age, or region).

- Transparency: The capability to explain model behavior and decisions to stakeholders, auditors, and affected users.

- Accountability and governance: Clear ownership of models and data, approval processes, documentation, and audit trails.
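One common way to make the fairness pillar measurable is to compare how often a model produces a positive outcome for each group. The sketch below computes per-group selection rates and the demographic parity difference (the largest gap between any two groups) in plain Python. The function names and the loan-approval data are hypothetical, and demographic parity is only one of several fairness definitions, so the right metric depends on the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (label 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval predictions for applicants from two regions.
    preds = [1, 0, 1, 1, 0, 1, 0, 0]
    region = ["north", "north", "north", "north", "south", "south", "south", "south"]
    print(selection_rates(preds, region))                # {'north': 0.75, 'south': 0.25}
    print(demographic_parity_difference(preds, region))  # 0.5
```

A gap of 0.5 here would not prove unfair treatment on its own, but it is the kind of signal that should trigger a closer review.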

Together, these three pillars link technical practices (metrics, monitoring, explainability) with organizational processes (policies, roles, review boards).

Why it matters even more in 2025-26

The last couple of years have brought growing regulatory and industry pressure: data-protection laws, sector-specific regulations (finance, health, HR), and preparation for emerging AI regulation all demand more than “good results on my test set”.

Many organizations now require:

- Model risk assessments and impact analyses before deployment.

- Human approval for high-stakes decisions.

- Clear documentation of data sources, assumptions, and limitations.
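Requirements like these can also be enforced mechanically, for example as a check in a release pipeline. The sketch below assumes a hypothetical policy in which each risk tier lists the documents a model must have before deployment, and high-risk models additionally need a named human approver. The tier names, artifact names, `DeploymentRequest`, and `deployment_blockers` are illustrative only, not a standard or a library API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Hypothetical policy: documents each risk tier must have before release.
REQUIRED_ARTIFACTS = {
    "low": {"data_sources"},
    "medium": {"data_sources", "risk_assessment", "limitations"},
    "high": {"data_sources", "risk_assessment", "impact_analysis", "limitations"},
}


@dataclass
class DeploymentRequest:
    model_name: str
    risk_tier: str                        # "low", "medium" or "high"
    artifacts: Set[str] = field(default_factory=set)
    human_approver: Optional[str] = None  # mandatory for high-risk models


def deployment_blockers(req: DeploymentRequest) -> List[str]:
    """Return the reasons a release should be blocked; an empty list means it may proceed."""
    if req.risk_tier not in REQUIRED_ARTIFACTS:
        return [f"unknown risk tier: {req.risk_tier!r}"]
    missing = REQUIRED_ARTIFACTS[req.risk_tier] - req.artifacts
    blockers = [f"missing artifact: {name}" for name in sorted(missing)]
    if req.risk_tier == "high" and not req.human_approver:
        blockers.append("high-risk model needs a named human approver")
    return blockers


if __name__ == "__main__":
    # Hypothetical high-risk model with incomplete documentation and no approver.
    req = DeploymentRequest("credit_scoring_v3", "high", {"data_sources", "risk_assessment"})
    for reason in deployment_blockers(req):
        print(reason)
```

For this example request the check reports the missing `impact_analysis` and `limitations` documents and the missing approver. Returning a list of reasons, rather than a bare yes/no, gives reviewers an actionable to-do list and leaves an audit trail of why a release was held.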

Beyond compliance, responsible AI also protects trust and reputation: a single biased model or a poorly governed rollout can damage customer confidence for a long time.

Steps teams can implement right now

A simple starter checklist:

- Establish risk tiers for models (low, medium, high) and scale review rigor accordingly.

- Report fairness metrics (e.g., performance by group) alongside accuracy, and track them over time.

- Use explainability tools (feature importance, SHAP/LIME) in review processes, particularly for high-impact models.

- Write brief “model cards” that outline each model's purpose, data, metrics, and known caveats.
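Two of these checklist items, tracking per-group performance over time and keeping a model card, fit naturally together. The sketch below is a minimal, hypothetical structure (the `ModelCard` class, its fields, and the churn-model data are illustrative assumptions, not an established model-card schema) that logs accuracy by group for each evaluation date and renders a short Markdown card.

```python
from collections import defaultdict
from dataclasses import dataclass, field


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


@dataclass
class ModelCard:
    """Hypothetical minimal model card: purpose, data, metrics and known caveats."""
    name: str
    purpose: str
    data: str
    caveats: list = field(default_factory=list)
    # Per-group accuracy keyed by evaluation date, so changes over time stay visible.
    metrics_history: dict = field(default_factory=dict)

    def log_metrics(self, date, y_true, y_pred, groups):
        self.metrics_history[date] = accuracy_by_group(y_true, y_pred, groups)

    def to_markdown(self):
        lines = [f"# Model card: {self.name}", "",
                 f"**Purpose:** {self.purpose}", f"**Data:** {self.data}", "",
                 "## Accuracy by group"]
        for date, scores in sorted(self.metrics_history.items()):
            row = ", ".join(f"{g}={acc:.2f}" for g, acc in sorted(scores.items()))
            lines.append(f"- {date}: {row}")
        lines += ["", "## Known caveats"] + [f"- {c}" for c in self.caveats]
        return "\n".join(lines)


if __name__ == "__main__":
    card = ModelCard(
        name="churn_model_v2",
        purpose="Flag customers at risk of churn for retention outreach",
        data="CRM records 2022-2024 (hypothetical)",
        caveats=["Few training examples for the 65+ age band"],
    )
    # Hypothetical evaluation run: the 65+ group gets one of two predictions wrong.
    card.log_metrics("2025-01", [1, 0, 1, 0], [1, 0, 0, 0], ["18-40", "18-40", "65+", "65+"])
    print(card.to_markdown())
```

Keying metrics by evaluation date is a deliberate choice: a drop for one group can show up in the card even when overall accuracy looks stable. In practice the card would usually live alongside the model in version control, and the metrics would come from a proper evaluation set rather than a handful of examples.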